On using LLMs (Large Language Models) like ChatGPT to get things done

(This image is licensed by me, Isaak Tsalicoglou, and my OVERBRING brand under Attribution-ShareAlike 4.0 International (CC BY-SA 4.0). Feel free to share and adapt it, as long as you maintain the license, provide a link to the license, give me appropriate credit, and indicate if changes were made.)

Note: “ChatGPT” is used here to denote OpenAI’s free, publicly available chat interface to an LLM. However, you can substitute other similarly advanced Large Language Models in the text below.

The luddite’s (?) worry

Some worry that using LLMs (ChatGPT in particular) will hold you back from building your own understanding of things while learning. The reasons given are twofold:

- You cannot query an LLM for knowledge because it will “hallucinate” a response that might be entirely false. Therefore, you might be feeding your brain with inaccuracies.

- Relying on an LLM to explain things to you, even if it were accurate, will worsen your learning and retention, because you will not have had to persevere to grasp deeply what you’re learning.

Therefore, the conclusions that sometimes follow are:

- Don’t use LLMs at all.

- Only use traditional means of learning (books, courses, etc.).

- Don’t use ChatGPT to prepare a report, because it degrades your work’s value; if it didn’t, you would not hesitate to add “ChatGPT was used in the preparation of this report”.

I think the problem here is a false dichotomy, and a misunderstanding of what LLMs are good for.

(There’s also another problem: the belief that learning must be arduous to “stick”. This belief has already been proven wrong countless times; I need only point you to Khan Academy to disprove it.)

What are LLMs good for? Not information recall.

An LLM is not a fountain of knowledge, because its output cannot be trusted. However, it can help you summarize and reformulate inputs, create metaphors that aid your understanding, distill key points, etc. It is excellent at summarization and reformulation, i.e. at tasks that start from your own input, assuming that input is factually accurate. Even then, you might still want to quality-assure the output.

In this summarization/reformulation use case, ChatGPT can be an excellent tool that enhances learning and understanding immensely. For example, you can give ChatGPT an excerpt from a website or textbook that explains something complex and ask it to rewrite the excerpt using a metaphor, or in a manner suitable for a 5-, 10- or 15-year-old. Or you can ask ChatGPT to reframe an input as an analogy, a metaphor, or anything else that places it in a context already familiar to you.

Does using an LLM degrade your work’s value? Not always.

It depends on how a tool (any tool) was used to prepare your work, e.g. a report. Did you “cheat on your homework” and pad your work with potential nonsense generated by an LLM, i.e. by using ChatGPT as a fountain of knowledge, which, given the way it works, it clearly is not meant to be? Or did you use ChatGPT’s aforementioned summarization/reformulation capabilities, which are excellent indeed, to “inspect” your work from various angles? Or to rephrase your text in various ways, such as making it more succinct, punchier, or less repetitive?

The former approach degrades your work’s value, indeed. The latter doesn’t. And yet, such “degradation” might not matter in the eyes of your work’s ultimate consumer.

I fully expect that numerous providers out there have, for months now, been arbitraging their clients’ unawareness of ChatGPT’s existence to churn out garbage reports with minimal effort. This is no different from, e.g., a graphic designer charging a client $500 for a brand logo while spending 25 bucks across 5 vendors on Fiverr, thus reaping the rewards of the client being unaware of Fiverr’s existence. It’s arbitrage, plain and simple, and absolutely nothing new.

Yet, even if a client is aware of Fiverr, the willingness to pay a premium of $475 to the graphic designer might represent the value the client sees in letting someone else get it done, regardless of how it gets done.

If the “ChatGPT-enhanced” report is hot garbage, compiled without any discernment on the part of its human author, then yes, there is a problem. But it’s not a new problem, not at all.

If the report has been “informed” by interactions with ChatGPT and curated by the provider’s expertise, and is therefore valuable to the client, does the use of ChatGPT matter? Again, it will depend on the ultimate consumer of the report, but most likely it will not.

To flip it around: what is the client of your work paying for? For example, is the value of the data-analytical insights you present diminished by the obvious fact that producing them would have been impossible, or at least very arduous, without Excel?

Does the client pay for inputs, or for the expected value? Obviously, it depends on the client, and clients that pay for the latter pay better and are not petty penny-pinchers.

Does the buyer of a building pay for the tools used in its construction or for the end result and its quality? Actually, for both: explicitly for the latter, and implicitly for the former, which is assumed to affect the latter materially, though it is not always clear how. Constructing a building with premium construction equipment by Hilti (a company I used to work for), for example, doesn’t automatically or directly make the building qualitatively better, though it might do so indirectly by enhancing the construction process, e.g. by making it easier or faster.

It’s not the LLM that might degrade your work. It’s your inappropriate use of it. And it still might not matter.

When should you use ChatGPT? Ideally, when you know your stuff well enough to spot BS.

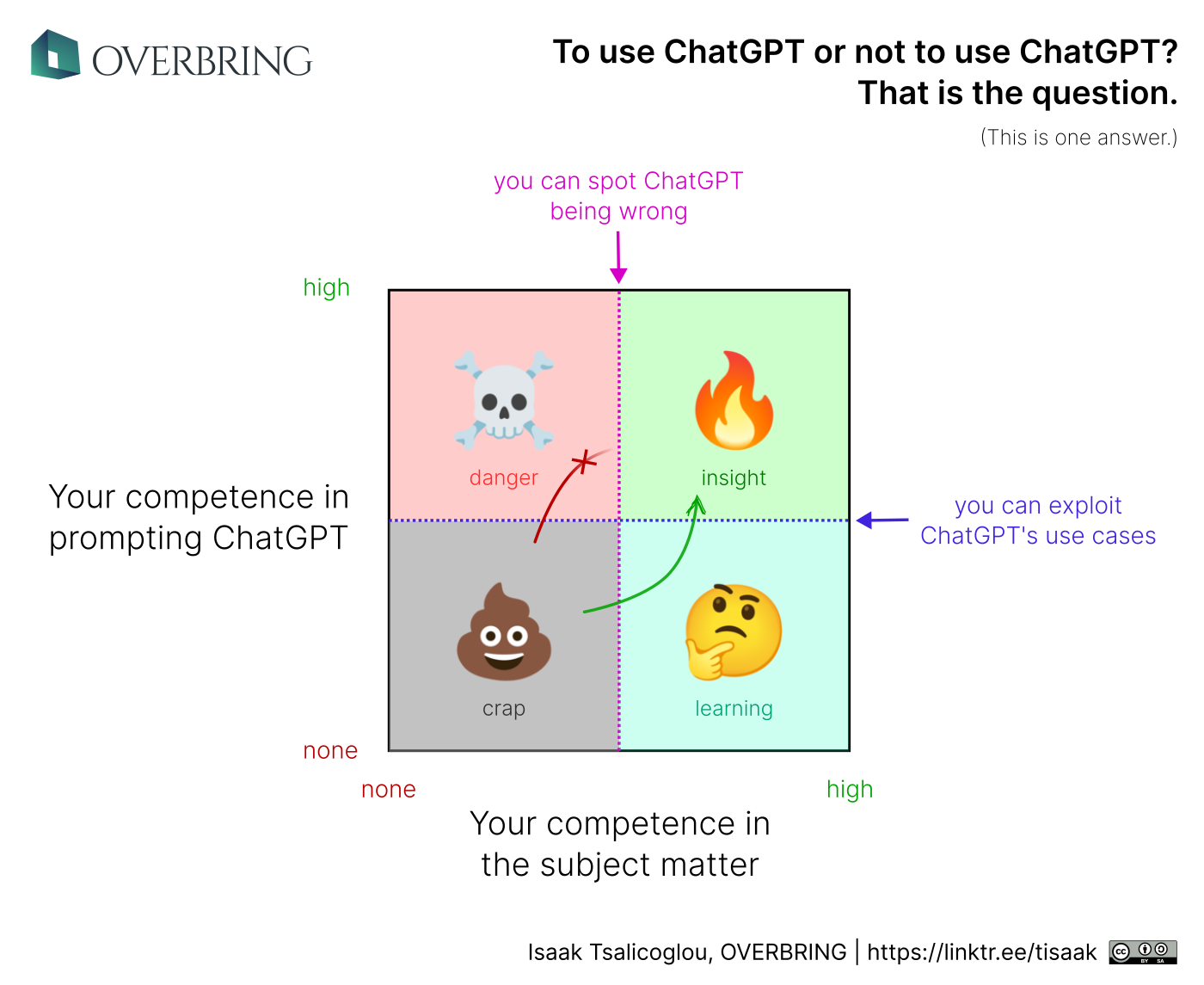

The question “to use ChatGPT or not to use it?” oversimplifies the problem. Add one more dimension to the model: the degree of your understanding of the subject matter to which you will be applying ChatGPT.

By crossing your competence in using LLMs with your subject-matter competence, you get a 2x2 matrix. It is still a massive simplification of reality, but it offers a much more nuanced view of when you can feel safe using ChatGPT productively, without the risk of getting fooled by what is essentially a statistical regurgitator/interpolator.

Four possible “zones”, therefore:

- The crap zone: you use LLMs blindly, without sufficient competence in the subject matter. This is a terrible idea, as it would be with any tool, from a metal file to a ground-penetrating radar. I don’t even know what this zone represents, beyond dabbling in a new technology without understanding what you might get out of it. Most likely, you will get crap out of it, without any ability to smell it.

- The danger zone: you use LLMs competently, yet without sufficient subject-matter competence. You will think you are learning, but you will learn little, because you cannot evaluate what the LLM returns. A similar and very common scenario: data scientists without domain knowledge. Lots of energy is expended on output of questionable value, which you cannot even evaluate as usable or not. Pass. Skip. Avoid.

- The learning zone: you have more than sufficient subject-matter competence to evaluate ChatGPT’s output as true or false, but are not yet skilled at prompting it to get good output. You will initially be disappointed by the output. However, if you persevere, you will learn how to prompt the LLM better, so that you reduce “hallucination” or are at least able to identify it. Over time, you will find better ways to prompt, and therefore to exploit the LLM’s core use cases of summarization/reformulation productively. From this zone, you can eventually reach…

- The insight zone: you are able to use ChatGPT competently and already have enough subject-matter competence to know whether you’re being fed “hallucinations”. Now we’re talking! This is where you can have a discussion with ChatGPT on something you know deeply, and have the LLM summarize, reformulate, reframe, and explore. Eventually you will be as amazed as I was when I used it on things I am fairly knowledgeable about.

How to start? Not from crap or danger.

How should you learn to use ChatGPT? Not by using it for something you are incompetent in. Skip the crap and danger zones and start directly from the learning zone. Keep at it, trying different prompts. In my experience, here’s what works:

- Provide plenty of context.

- Use natural language, as if you are chatting with a person.

- Add modifiers specifying how you want the answer to come out (succinct, extensive, simple, etc.).

- Avoid “poisoning the well” by providing biased inputs that are likely to give you biased outputs.

And, finally:

- An LLM is a tool. If you use it properly, it can support what you do. There’s no need to be superstitious about its use.

- Don’t use LLMs as fountains of knowledge or for “ex nihilo” synthesis. LLMs are excellent regurgitators and reformulators. Learn to use them for those use cases, which can prove very powerful in the hands of competent people!

- Don’t worry about an LLM diluting your work’s value any more than the calculator, Excel, or a power tool did in the respective situations in which they are now deployed, without anybody taking offense at their use.

- Remember: the value of your work lies not in the pain of your toil.